Data Quality In Crisis

Exploring the plight of economic data quality.

Once, market participants pored over quarterly reports, a handful of key economic indicators, and the occasional whispered rumour. Data came in drips, and decisions were deliberate. Today, information pours in like a firehose. From social media feeds to satellite images, data flows constantly. Yet, in many ways, we may be worse off. The flood is now the noise, and amidst it all, the question lingers: has more data made us smarter or just more confused?

The proliferation of data over the past few decades has fundamentally changed the way markets operate, but the relationship between data availability and data quality has never been more tenuous. As we have moved from data scarcity to data overload, distinguishing useful information from irrelevant information has become crucial. Here we explore how the data revolution is shaping today’s markets, looking back at the past, evaluating the present, and offering a glimpse of the future.

The Evolution

Historical Data: The Era of Scarcity and Precision

In decades past, market data was scarce, but it was trusted. Investors, policymakers, and economists worked with a limited set of indicators: quarterly earnings reports, economic data releases, and surveys. These numbers, though fewer in volume, were seen as accurate and meaningful, and slower, more deliberate decisions drove market behaviour. Information trickled in, and people had time to process it.

Without the constant barrage of data points, decision-making tended to be slower but more thoughtful. Market participants would rely on long-term trends, personal relationships, and intuition. The limited availability of data created a certain reverence for accuracy. Economic reports like the US Bureau of Labor Statistics (BLS) nonfarm payrolls were given ample weight, and market responses were often tempered by the need for careful analysis rather than knee-jerk reactions.

The 1987 crash and the 1970s stagflation era are examples of how economic shocks played out with less real-time data. Decision-making was slower, but the overall market reaction often carried a sense of deliberation, which we now might consider an advantage in a world full of instant reactions.

The Digital Age: The Rise of Real-Time Data and Its Challenges

The turn of the century ushered in an age of digital transformation. The internet, machine learning, and big data analytics created a landscape where data was no longer scarce; it was abundant. Social media feeds, satellite images, web scraping, and other forms of alternative data flooded markets. The tools to collect and process this data grew exponentially, but so did the complexity of navigating it.

The advent of algorithmic trading and high-frequency trading changed the rules. Markets began to react faster to every data point. And with that speed came the risk of overreaction. While having access to this much data has allowed for smarter, faster decisions in some cases, it has also resulted in data overload. More is not always better. The flood of information, in many cases, has simply made it harder to sift through the noise and identify what’s truly meaningful.

The rise of alternative data such as social sentiment, satellite imagery, and web scraping has had a profound effect on market reactions. As more traders rely on data to make instantaneous decisions, short-term market moves often become driven by incomplete or sometimes unreliable sources of information. This shift from fundamental to data-driven trading has contributed to a landscape where volatility is often amplified by misinterpretations of the numbers.

The Data Problem: An Overload That Skews Markets

The overabundance of data today is not just an inconvenience; it is a significant problem for markets. One of the most striking examples of this is the recent firing of BLS Commissioner Erika McEntarfer by President Donald Trump. The July jobs report, which showed a large downward revision in the previous months’ payroll numbers, became a lightning rod for criticism. Trump alleged, without evidence, that the data had been manipulated, calling it “rigged.” This incident highlighted a fundamental issue: when economic data is not only overwhelming but also questioned, the uncertainty it creates can destabilise markets.

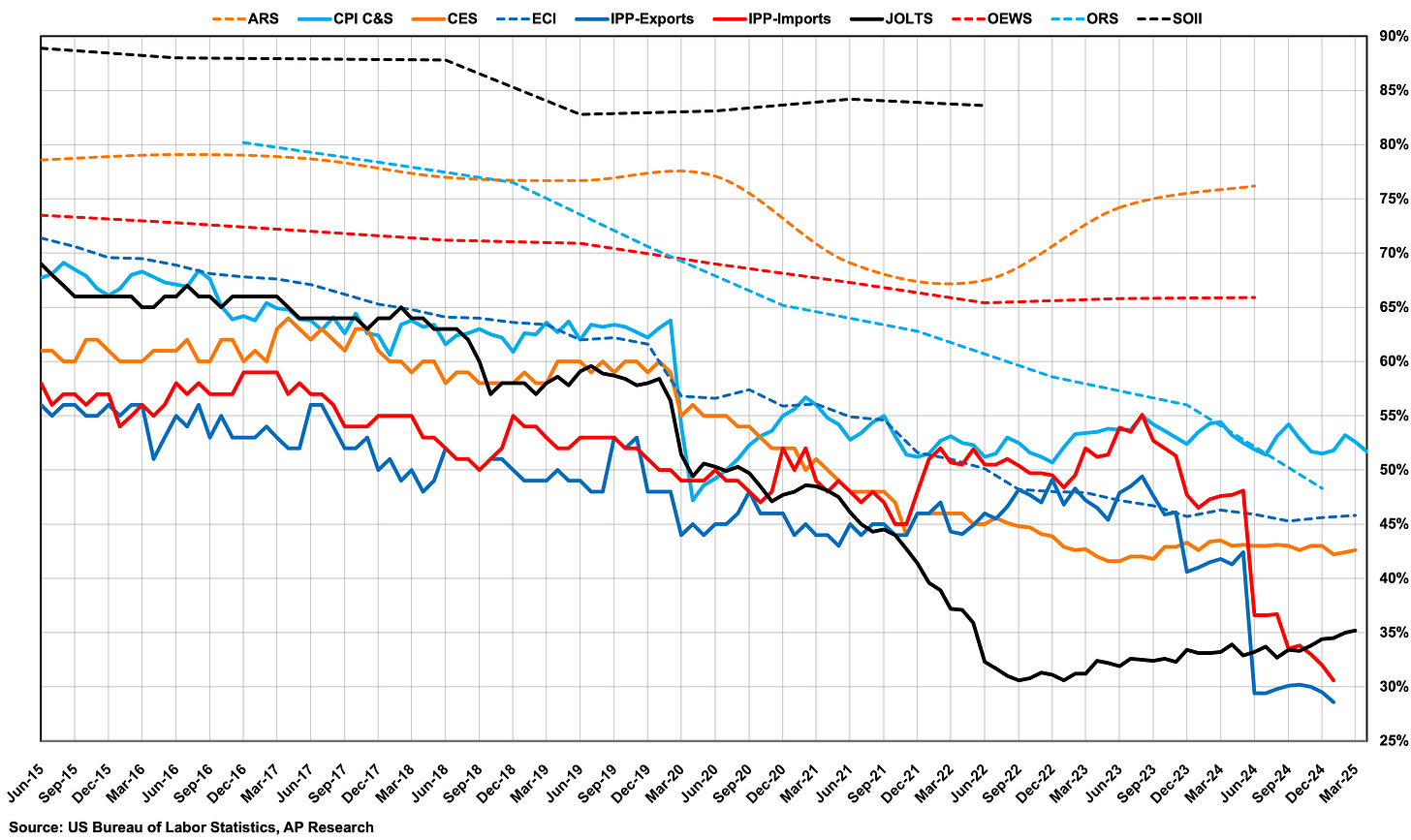

The BLS relies on voluntary surveys to compile its data. With response rates in free fall (down to just 35% for the March report), survey-based data is becoming less reliable. This issue is not isolated to the BLS; it extends across most survey-based data sources. The public’s decreasing willingness to participate in surveys means that the BLS’s job reports are increasingly delayed, and their accuracy is compromised.
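To make the link between response rates and reliability concrete, here is a minimal sketch of how sampling error grows as fewer units respond. It is not the BLS's methodology: it assumes simple random sampling, a hypothetical survey of 10,000 invited units, and a textbook margin-of-error formula for a proportion. It also ignores non-response bias, which can matter more than sampling error when responders differ systematically from non-responders.

```python
import math


def margin_of_error(sample_size: int, response_rate: float,
                    p: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion, using responders only.

    Assumes simple random sampling; sample_size and response_rate are
    illustrative inputs, not actual BLS figures.
    """
    respondents = sample_size * response_rate
    if respondents <= 0:
        raise ValueError("need at least one respondent")
    return z * math.sqrt(p * (1 - p) / respondents)


# Hypothetical survey of 10,000 invited units (illustrative numbers only).
for rate in (0.70, 0.50, 0.35):
    moe = margin_of_error(10_000, rate)
    print(f"response rate {rate:.0%}: margin of error ±{moe:.2%}")
```

Under these assumptions, halving the response rate widens the margin of error by a factor of about 1.41 (the square root of two), which is one reason first estimates built on thin responses tend to be revised more heavily.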

E.J. Antoni, the economist nominated yesterday by President Donald Trump to lead the BLS, was vocal in an interview earlier in August about his desire to suspend monthly jobs reports until they become more accurate.

“How on earth are businesses supposed to plan—or how is the Fed supposed to conduct monetary policy—when they don’t know how many jobs are being added or lost in our economy? It’s a serious problem that needs to be fixed immediately.”

“Until it is corrected, the BLS should suspend issuing the monthly job reports but keep publishing the more accurate, though less timely, quarterly data,” he said, adding, “Major decision-makers from Wall Street to D.C. rely on these numbers, and a lack of confidence in the data has far-reaching consequences.”

The problem here is not just the data itself, but the timeliness and reliability of that data. The Federal Reserve and other policymakers are deeply reliant on economic data for their decision-making, but as these numbers become more prone to revision and less accurate, the risk is that critical decisions—such as changes in interest rates—are based on data that is already outdated.

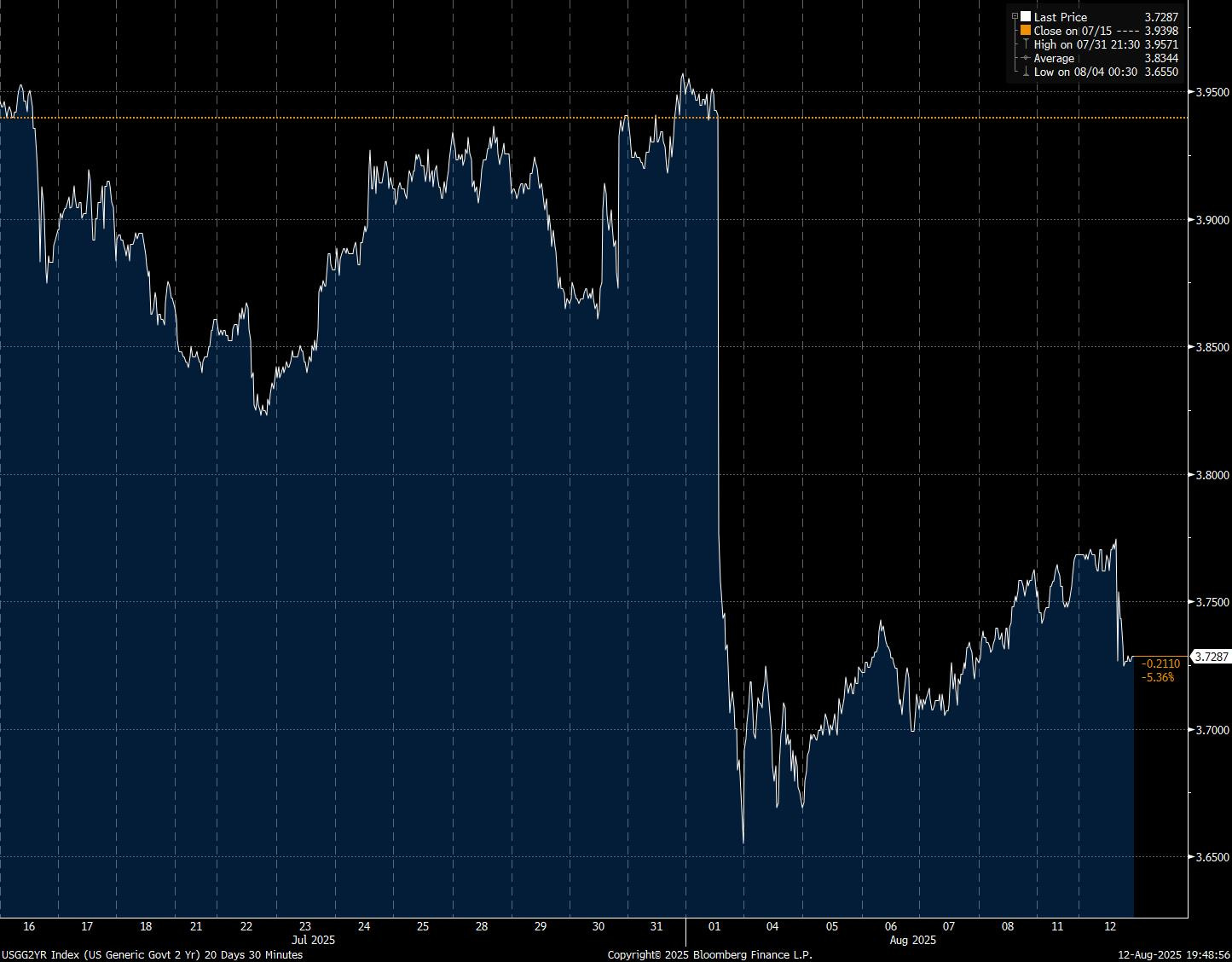

This became evident when the Fed held short-term interest rates steady at its July meeting. Had the BLS provided a more accurate payroll count, there’s a strong possibility that the Fed would have opted for a rate cut at that meeting.

“Inflation isn’t a serious problem right now. Tariffs are a tax hike. Tax increases lower inflation because they lower after-tax income. If the Fed had known the May and June jobs reports were as weak as they were, they could have cut rates by 25 bps in June & July.”—Marc Sumerlin

The bond market reacted immediately to the revised data, with the two-year Treasury yield falling by 0.25 percentage points. This rapid shift underscores the real-world implications of inaccurate economic data: policymakers react to it, markets react to them, and all this volatility arises from a lack of trust in the data.

Rethinking Data: Moving Beyond Surveys and Toward Real-Time Insights

How could data be used in the future? (Not that anyone from the BLS or Fed is reading this article, but just for a thought experiment amongst ourselves and our readers.)

The current system of relying on survey-based data is outdated. Real-time data from sources such as job postings on platforms like Indeed (which closely mirror BLS data but with less volatility) offer a more accurate and timely picture of the labor market. It’s not just about having more data; it’s about having better data: data that reflects the current reality and can be used to make decisions swiftly.

Leveraging real-time payroll data through tax withholding information could revolutionise how we understand job growth. Public companies already track headcount in real time, and requiring them to report material changes in employment weekly would vastly improve the accuracy of labor data. It would also reduce the time lag between when events occur and when policymakers can act on them.

Furthermore, alternative data sources that track hiring trends in real-time could be integrated into the BLS’s data collection process, allowing for faster and more accurate updates. Payroll processors like ADP already use their data to produce employment reports that align closely with BLS numbers, but they cover a smaller portion of the workforce. Expanding this approach to include more businesses and workers would allow for a much more comprehensive, real-time payroll database.
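One way to picture what "integrating" a second source could mean is inverse-variance weighting, a standard statistical technique for combining two independent estimates of the same quantity. The sketch below is purely illustrative: the function name `blend_estimates`, the job-growth figures, and the standard errors are all hypothetical, and nothing here describes how the BLS or ADP actually produce their numbers. It also assumes both sources are unbiased and independent, which would need to be checked in practice.

```python
def blend_estimates(estimates):
    """Combine (value, standard_error) pairs by inverse-variance weighting.

    More precise sources (smaller standard error) get more weight.
    Assumes the estimates are independent and unbiased.
    """
    weights = [1.0 / (se ** 2) for _, se in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    blended_se = (1.0 / total) ** 0.5
    return value, blended_se


# Hypothetical monthly job-growth estimates, in thousands: (value, standard error).
survey_estimate = (75.0, 60.0)             # noisier survey-based figure
payroll_processor_estimate = (110.0, 40.0)  # partial-coverage payroll figure

blended, blended_se = blend_estimates([survey_estimate, payroll_processor_estimate])
print(f"blended estimate: {blended:.1f}k jobs, standard error {blended_se:.1f}k")
```

The blended figure always lands between the two inputs, and its standard error is smaller than either input's, which is the basic argument for pooling sources rather than replacing one with the other.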

The Future of Market Data: AI and Beyond

Looking ahead, it is clear that technology must be harnessed to improve the quality of market data. While we now live in an age of data overload, the next step will be in how we process and make sense of it. Machine learning and artificial intelligence (AI) can play a crucial role in filtering out the noise and identifying actionable insights in real time. These technologies could help policymakers and investors alike navigate the overwhelming sea of data and focus on what really matters.

But even with AI, data verification will remain crucial. The future lies not just in collecting data but in verifying its accuracy and ensuring it reflects the true state of the economy. Only through these improvements can we achieve the data quality that markets demand.

The Need for Data Mastery

As the world has moved from data scarcity to data overload, the need for mastery over the flow of information has never been more urgent. The challenge for policymakers and investors is to move beyond simply gathering more data and focus on improving the quality and speed at which data is collected and interpreted.

In this new era, distinguishing between valuable data and noise will be the key to navigating volatility and making informed decisions. The question isn’t whether there’s enough data; it’s whether the data we have is the right data. Without mastering the art of data interpretation, the flood of information will continue to destabilise markets rather than guide them.

AP
