Survival of the Leanest

The AI-spending shift.

The AI arms race among big tech companies has primarily focused on developing the most advanced models, acquiring the highest-performing AI chips, and scaling massive cloud infrastructure.

However, a new competitive front is emerging as the industry matures: cutting AI infrastructure and capex spending while maximizing efficiency.

Earlier this week, we saw a tweet put out by Marko Kolanovic, the former chief market strategist at JP Morgan:

We discussed this idea as a team, and views differed on whether we agreed with him. Given that this debate is likely to surface in more conversations over the coming months, we wanted to set out where we currently stand.

Let’s start with the argument for Kolanovic’s view.

The Unsustainable Rise in AI Capex Costs

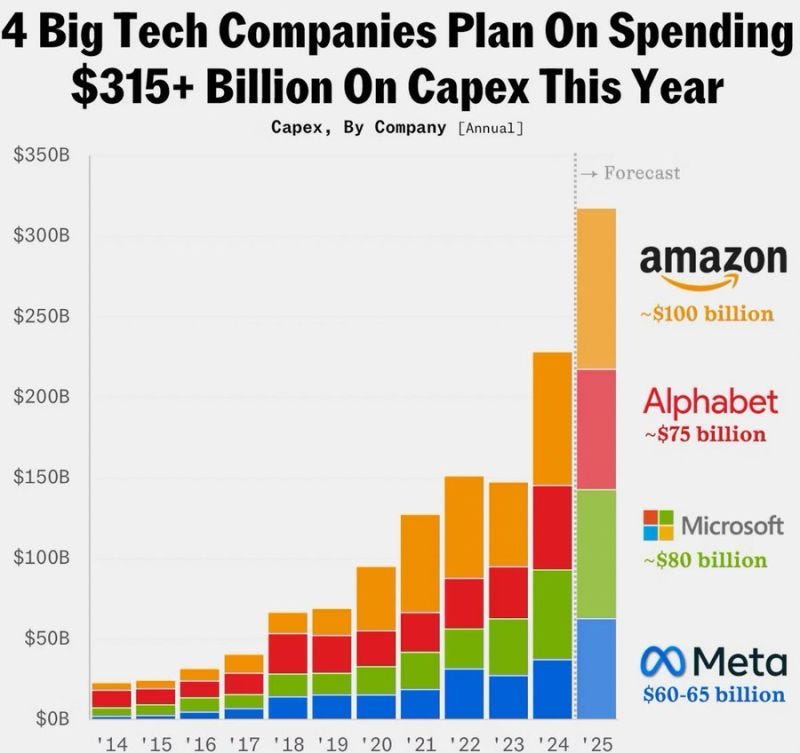

Over the past two years, major tech firms such as Microsoft, Google, Meta, Amazon and Nvidia have invested billions into AI data centres, GPUs, and networking infrastructure. However, these expenditures are reaching unsustainable levels.

Remember, in Davos earlier this year, Microsoft CEO Satya Nadella reinforced his commitment to spend $80bn on building AI data centres in the 2025 financial year, a significant increase on the $53bn from last year. Other major tech firms have come out in recent months with similarly bold ambitions.

Yet the continued rise in spending is leading to concerns among investors about return on investment and even cash flow implications, given the scale of such outlay.

We’re seeing early signs that the pivot may be starting to happen. The article that Kolanovic cites in his tweet covers a memo from investment bank TD Cowen reporting that Microsoft has cancelled leases with multiple data centre providers totalling a “couple hundred megawatts” of capacity. No exact reasoning has been provided, but cost is an obvious likely factor.

After all, the initial rush to dominate AI justified heavy spending, but now investors are demanding efficiency. Microsoft isn’t alone in being under pressure to prove that their AI investments can generate profits, not just headline-grabbing breakthroughs.

The Shift Towards AI Efficiency and Optimization

Rather than continually expanding infrastructure, big tech firms are now focused on optimising AI models and reducing compute requirements. The wake-up call for this came with the DeepSeek breakthrough last month, when the Chinese start-up announced the development of its AI model, DeepSeek-V3, claiming it was built in just two months at a cost of under $6mn.

Naturally, a model produced for a fraction of the AI capex spend of the big U.S. tech giants raised eyebrows. In fact, you could argue that this was when the idea of cost cutting as the next stage of the AI race first took hold.

There are several ways to build AI more efficiently. For example, experts speculate that DeepSeek may have employed “distillation” methods, wherein a smaller model is trained to replicate the behaviour of a larger, pre-existing model. This approach can significantly reduce training time and costs. Where it stands legally with regard to intellectual property is somewhat murky.
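For readers curious what distillation looks like in practice, here is a minimal sketch of the general idea. It is a generic textbook-style formulation, not a description of what DeepSeek actually did, and the function names, temperature value and example scores are our own illustrative assumptions. The smaller "student" model is penalised according to how far its output probabilities drift from those of the larger "teacher" model.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw model scores into probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened outputs.

    Zero means the student reproduces the teacher's behaviour exactly;
    larger values mean the student is further away. The temperature and
    the T^2 scaling are common conventions, assumed here for illustration.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical scores over three possible answers to one prompt.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different answer

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

Training the student means nudging its parameters to push this loss down across many prompts. The cost saving comes from the student being far smaller than the teacher, and from the teacher already existing.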

Another approach, which Apple has been pushing hard, is to shift AI inference from expensive cloud servers to consumer devices (e.g., Apple’s AI running on iPhones) to reduce cloud infrastructure demand. In fact, this gives Apple an edge in AI efficiency without incurring massive cloud infrastructure costs.

Another critical area of focus is improving the efficiency of deployed AI chips and servers. New GPU clusters are great for Nvidia’s sales, but AI training workloads can instead be distributed across existing infrastructure, providing a cost saving.

Companies like Google and Amazon are designing AI accelerators that reduce dependency on Nvidia’s expensive GPUs. Even though the development here goes against the broader theme of cutting AI capex, if they can be brought online shortly, it should cut longer-term capex spend.

The counter-argument…

Infrastructure is Essential for Long-Term Growth

The claim that the next AI race for big tech companies will be focused on cutting AI spend can be countered on several fronts, but our discussion centred on two main strands.